What the "Cobra Effect" Says About API Metrics

Net API Notes for 2023/09/27, Issue 223

Ope! At some point, I blinked, and suddenly, October is here! That means, much like many of you, my 2024 planning is right around the corner. That also means, regardless of whether you work on a team responsible for an individual API or oversee a suite of integrations, you're thinking about metrics - how to quantify your success thus far, how those numbers compare to your peers, and what you want to change about KPIs heading into next year.

Peter Drucker, one of the figureheads of modern management theory, often said, "You can't manage what you can't measure" - hence all that hoo-ha over metrics during any planning effort. However, finding meaningful measurements of an API's contribution to business objectives can be hard. And even if you do arrive at a plausible metric, you run the risk of a phenomenon called proxy failure.

Goodhart's law, which you might be familiar with, is an adage that says, "When a measure becomes a target, it ceases to be a good measure". Why is that? What forces do we need to be aware of when measuring API success? Are all metrics doomed to be gamed?

I attempt to answer those questions (and more) in this edition of Net API Notes.

The Venn Diagram of Proxies, Cobras, and APIs May Surprise You

When I hear the word 'proxy' around APIs, I think of things like API gateways. However, when talking about 'proxy failure', the proxies in question are those metrics that stand in for actual business objectives. A proxy for developer performance, albeit a bad one, would be "lines of code produced in a day".

There are several reasons why we use proxies. Sometimes we may not know how our work contributes to the top-level organizational goal, especially something necessary but long-lived and obscure like "digital transformation". Proxies provide direct incentives that are (1) close in time, (2) measurable, and (3) personally relevant. It is easier to discuss a proxy, like "number of new API integrations I did" during the annual performance review, rather than "My role in bringing the company together as one".

A proxy is a metric that stands in for an actual goal. But it is in that abstraction where problems can occur. The best illustration of how proxy failure can occur is with a story.

The Cobra Effect Is An Example of Proxy Failure

During British rule in Delhi, India, the colonial government (regulators in a system) wanted to make the city safer (a goal, albeit subjective). Their chief concern was the city's large number of venomous cobras. To reduce the snake population, they offered a bounty for every dead cobra (an easily measurable proxy contributing to the goal). Initially, this seemed successful, as many skins were turned in for the reward.

However, some enterprising individuals (actors in the system) realized they could profit from this scheme by breeding cobras. They began raising snakes to kill for the bounty. When the regulators became aware of this, they discontinued the bounty program. With no incentive to keep or kill the cobras they were breeding, the actors released the snakes, leading to an even larger cobra population than before the bounty was introduced (a result opposite of the initially intended goal).

The Wrong API Metric Also Risks Proxy Failure

I experienced a similar situation while leading an API Center of Excellence (CoE) for a large enterprise environment. From the CEO to executive management across each business unit, the goal was to become more innovative and agile throughout our technology execution. Creating a healthy, continuously improving API culture (another goal) was an essential piece of the larger strategic plan.

Something needed to be shared during town halls and annual all-hands to demonstrate progress. What became common was at least one slide showing the Number of New APIs for the given time frame compared and contrasted with the other business units. Did you make the most APIs last year? Congratulations, your unit is the pacesetter! Are you lagging behind? Too bad, it looks like you have some work to do (oh, and your percentage of spoils to divvy out as bonuses will be adjusted accordingly)!

Number of New APIs had become a proxy for more innovative and agile technology development in this environment.

As you might imagine, the actors in the system were highly motivated to focus expressly on gaming the proxy rather than the larger system goals. The more APIs a team could create, the better their division would appear compared to others. Submissions for API review took a turn toward the infinitesimal. My CoE team probed the use case viability and worked to create sensible boundaries. However, they were often challenged by groups (particularly VPs) who believed they were practicing "microservice architecture", where the primary benefit was seen as "more services".

The proxy had failed. Rather than increased innovation and agility, consumers of these fine-grained services had to work harder. They had to manage more keys, cross-correlate more documentation, and endure more comprehension overhead while putting Humpty Dumpty back together again.

Realizing our mistake, we began working on alternatives to Number of New APIs. Some were false starts, while others showed promise. But having lived through a proxy failure, I'm keen to avoid a repeat experience in the future.

The First Step To Better Proxies Is Admitting They're Not The Goal

"Dead Rats, Dopamine, Performance Metrics, and Peacock Tails: Proxy Failure is an Inherent Risk in Goal-Oriented Systems" is a frontrunner for the most imaginatively named paper. The piece by Yohan John, Leigh Caldwell, Dakota McCoy, and Oliver Braganza also attempts to identify why proxy failures are common across contexts as diverse as economics, academia, machine learning, and ecology. Their sobering conclusion is that proxy failures are an inherent risk in any complex, goal-oriented system.

In our year-end API planning, nobody intends to mimic the McNamara fallacy or create the kind of environmental incentives that led to the Wells Fargo cross-selling scandal. Assuming positive intent, I suspect everyone is attempting to do the right thing; enterprise strategic goals are often just too large, subjective, and/or long-lived to provide meaningful guidance during daily decision making otherwise. We must use proxies. But there are some steps we can take to avoid the worst of proxy failures.

First, as the paper authors point out, "whenever proxies do not perfectly capture the goal [which would be all of them, at least as I'm using the term here], a reduction in the reward for the proxy is warranted". In my Number of New APIs example, the quantity of APIs was useful to create conversations and demonstrate positive uptake across the enterprise. But it shouldn't have been tied (either implicitly or explicitly) to bonus payouts. Those should have remained laser-focused on demonstrated business impact.

Second, the paper suggests that "whenever there is some additional selection or optimization pressure that operates on the regulator itself, proxy divergence is constrained". Stated another way, those creating the proxy metrics must be vigilant and regularly evaluate whether the proxy is achieving its goals. Virtuous feedback loops are a good thing and apply to improving our proxies, too. Even better if processes and controls build in regular re-evaluation.

Third, don't be afraid to discard proxies when they no longer serve their intended purpose. Changing proxies becomes more complicated when they become "baked-in" to enterprise reporting dashboards or executive readout cadences. While management may desire stability for the ability to do "apples-to-apples" comparisons, this risks the calcification of the proxy, leaving it at the forefront of initiatives long after it has ceased being a good metric. However, discarding may be necessary when the costs of the proxy outweigh the benefits.

The paper has several additional concepts that go deeper into what happens with proxies. These include phenomena like "proxy treadmills", "proxy cascades", and "proxy appropriation". If you're responsible for setting the metrics for your area in the coming year, I'd suggest you check it out. At the very least, brainstorm how your proxy may be "hacked", and spend a few cycles considering how you might detect when it begins happening.
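
As one way to picture that detection step, here is a minimal Python sketch. It is not something from the paper or from my CoE days; it assumes you can pair the proxy (new APIs per quarter) with a counter-metric that is harder to game (active consumers per published API). The `QuarterSnapshot` fields, the function names, and the sample numbers are all hypothetical, made up purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class QuarterSnapshot:
    """Hypothetical per-quarter figures for one business unit."""
    label: str
    new_apis: int          # the proxy: APIs published this quarter
    total_apis: int        # all APIs the unit has published so far
    active_consumers: int  # distinct consuming apps seen this quarter


def consumers_per_api(snapshot: QuarterSnapshot) -> float:
    """Counter-metric: how much use each published API is actually getting."""
    if snapshot.total_apis == 0:
        return 0.0
    return snapshot.active_consumers / snapshot.total_apis


def flag_proxy_divergence(history: list[QuarterSnapshot]) -> list[str]:
    """Return labels of quarters where the proxy rose while the counter-metric fell."""
    flagged = []
    for previous, current in zip(history, history[1:]):
        proxy_rose = current.new_apis > previous.new_apis
        usage_fell = consumers_per_api(current) < consumers_per_api(previous)
        if proxy_rose and usage_fell:
            flagged.append(current.label)
    return flagged


# Made-up numbers: Q3 shows a burst of new APIs with little added usage.
history = [
    QuarterSnapshot("Q1", new_apis=4, total_apis=10, active_consumers=30),
    QuarterSnapshot("Q2", new_apis=6, total_apis=16, active_consumers=48),
    QuarterSnapshot("Q3", new_apis=20, total_apis=36, active_consumers=54),
]
print(flag_proxy_divergence(history))
```

A flagged quarter isn't proof of gaming; it is a prompt to go have a conversation. And, of course, the counter-metric is itself a proxy, so it deserves the same periodic scrutiny.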

Milestones

- Oh Kong, no. No, no, no, no, NO!

- Tyk is kicking off a 7-week free program entitled "API platform engineering fundamentals". The entirely online course started on September 28th. However, sessions are also available asynchronously, so people can attend as they are able.

- Leonard Richardson, the co-author of RESTful Web APIs and the namesake of "Richardson's Maturity Model", is looking for new opportunities starting in December. If you are a mission-oriented org (involved in publishing, freedom of information access, or climate issues) and want to work with a legend, slide into his DMs already.

Wrapping Up

I'll end this newsletter with a special thank you to my paid subscribers. With their help, this effort remains free of advertisements, information selling, or paywalls.

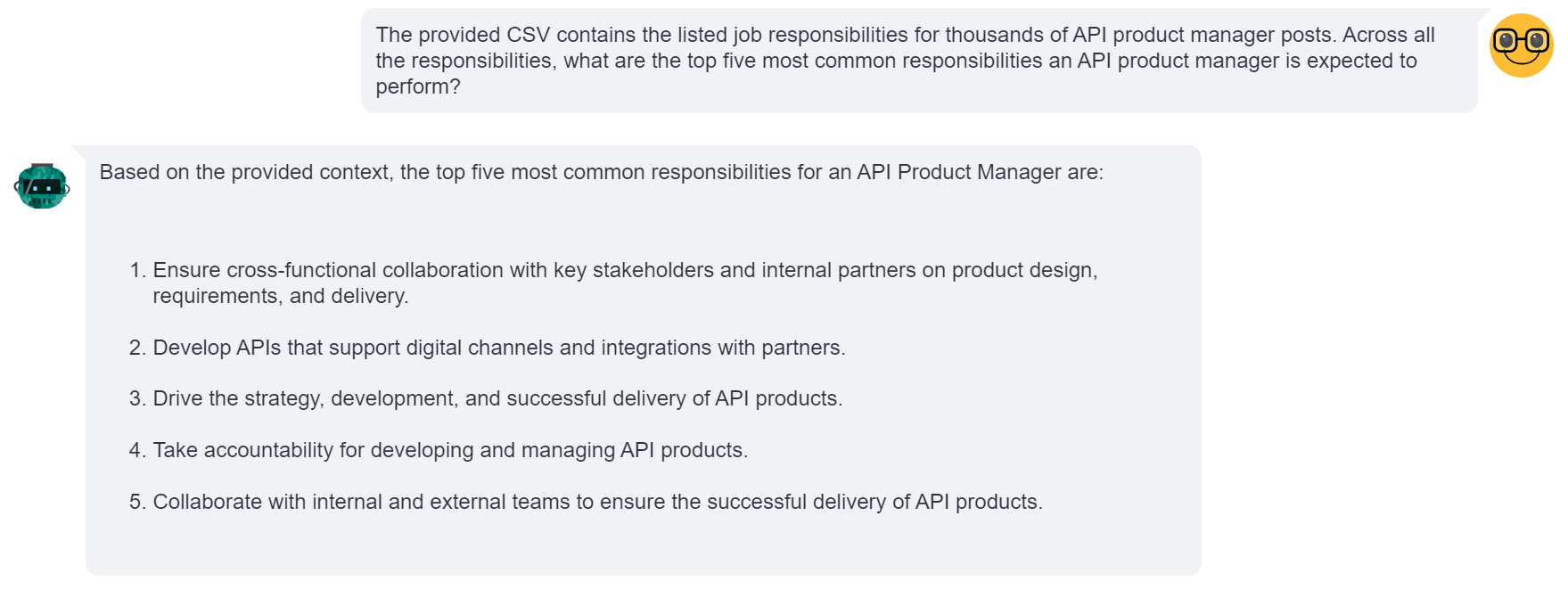

Further, the paid subscribers' donations help cover the expenses of building out a historical database of API-related job listing information. This is going to be a tremendous amount of ongoing work. However, as I continue to work through the thousands of data points I've assembled, there are already opportunities to do NLP and machine learning analysis to discover important insights. If nothing else, it was a great excuse to re-install Python on my lappie. There are several MB of historical data that I will be adding shortly that will be useful for some trend analysis. But that is a note for another day.

If you'd like to become a paid subscriber, head over to the subscription page. For more info about what benefits paid sponsorship includes, check out this newsletter's 'About' page.

Till next time,

Matthew (@matthew on the fediverse and matthewreinbold.com on the web)